Guangfu Ma

A Cascaded Information Interaction Network for Precise Image Segmentation

Jan 02, 2026

Abstract:Visual perception plays a pivotal role in enabling autonomous behavior, offering a cost-effective and efficient alternative to complex multi-sensor systems. However, robust segmentation remains a challenge in complex scenarios. To address this, this paper proposes a cascaded convolutional neural network integrated with a novel Global Information Guidance Module. This module is designed to effectively fuse low-level texture details with high-level semantic features across multiple layers, thereby overcoming the inherent limitations of single-scale feature extraction. This architectural innovation significantly enhances segmentation accuracy, particularly in visually cluttered or blurred environments where traditional methods often fail. Experimental evaluations on benchmark image segmentation datasets demonstrate that the proposed framework achieves superior precision, outperforming existing state-of-the-art methods. The results highlight the effectiveness of the approach and its promising potential for deployment in practical robotic applications.

Cascaded Interaction with Eroded Deep Supervision for Salient Object Detection

Nov 30, 2023

Abstract:Deep convolutional neural networks have been widely applied in salient object detection and have achieved remarkable results in this field. However, existing models suffer from information distortion caused by interpolation during up-sampling and down-sampling. In response to this drawback, this article starts from two directions in the network: feature and label. On the one hand, a novel cascaded interaction network with a guidance module named global-local aligned attention (GAA) is designed to reduce the negative impact of interpolation on the feature side. On the other hand, a deep supervision strategy based on edge erosion is proposed to reduce the negative guidance of label interpolation on lateral output. Extensive experiments on five popular datasets demonstrate the superiority of our method.
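As a rough illustration of the label-side idea, the sketch below applies plain morphological erosion to a binary ground-truth mask, which shrinks the object by one pixel per iteration along its edge. The abstract does not say how the erosion is defined or how it is paired with each lateral output, so the 3x3 structuring element, the `erode` function name, and the `iterations` parameter are all assumptions made for this example.

```python
from typing import List

Mask = List[List[int]]  # binary mask: 1 = salient object, 0 = background


def erode(mask: Mask, iterations: int = 1) -> Mask:
    """Binary erosion with a 3x3 square structuring element (assumed).

    Pixels outside the image are treated as background, so objects that
    touch the border are eroded there as well.
    """
    h, w = len(mask), len(mask[0])
    current = [row[:] for row in mask]
    for _ in range(iterations):
        nxt = [[0] * w for _ in range(h)]
        for y in range(h):
            for x in range(w):
                keep = 1
                # A pixel survives only if its whole 3x3 neighbourhood is foreground.
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        yy, xx = y + dy, x + dx
                        inside = 0 <= yy < h and 0 <= xx < w
                        if not inside or current[yy][xx] == 0:
                            keep = 0
                nxt[y][x] = keep
        current = nxt
    return current


# Hypothetical 5x5 label with a 3x3 object in the middle.
label = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
eroded_once = erode(label, iterations=1)
```

One possible use, again an assumption, would be to supervise coarser side outputs with more strongly eroded labels, so that pixels near the boundary, where interpolation is least reliable, contribute less misleading guidance.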

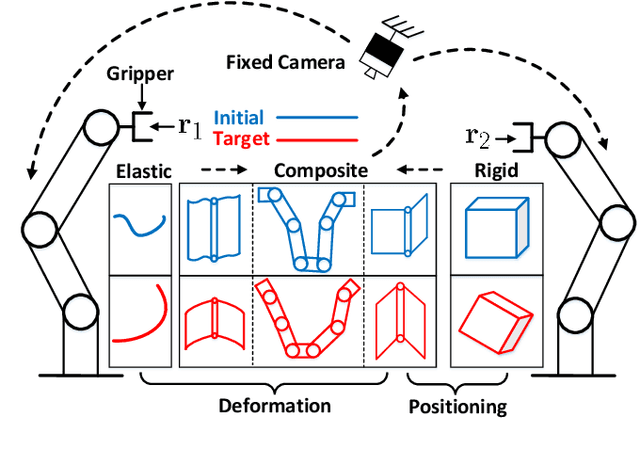

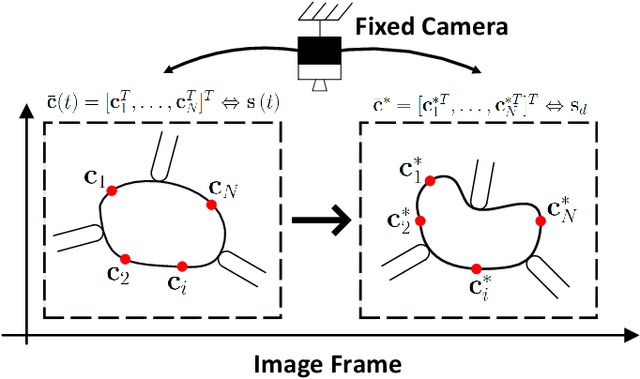

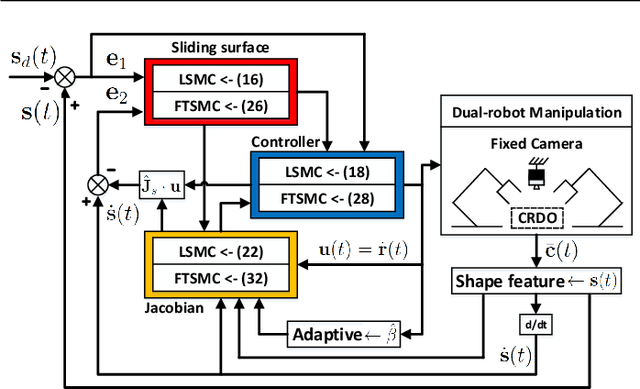

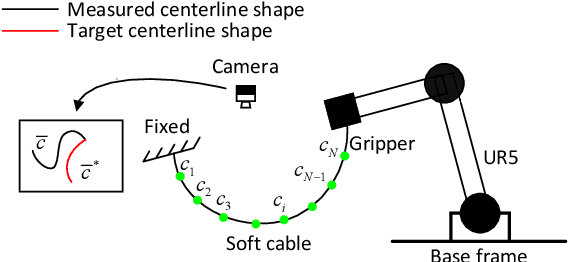

Contour Moments Based Manipulation of Composite Rigid-Deformable Objects with Finite Time Model Estimation and Shape/Position Control

Jun 04, 2021

Abstract:The robotic manipulation of composite rigid-deformable objects (i.e., those with mixed non-homogeneous stiffness properties) is a challenging problem with clear practical applications that, despite recent progress in the field, has not been sufficiently studied in the literature. To address this issue, we propose a new visual servoing method that can manipulate this broad class of objects (which ranges from soft to rigid) with the same adaptive strategy. To quantify the object's infinite-dimensional configuration, our approach computes a compact feedback vector of 2D contour moment features. A sliding mode control scheme is then designed to simultaneously ensure the finite-time convergence of both the feedback shape error and the model estimation error. The stability of the proposed framework (including the boundedness of all the signals) is rigorously proved with Lyapunov theory. Detailed simulations and experiments are presented to validate the effectiveness of the proposed approach. To the best of the authors' knowledge, this is the first time that contour moments along with finite-time control have been used to solve this difficult manipulation problem.
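To make the notion of a compact moment-based feedback vector concrete, here is a minimal sketch that computes low-order moments from sampled contour points. The abstract does not give the exact moment definition or the feature ordering used in the paper, so the point-set averaging, the function names, and the choice of centroid plus central moments are assumptions for illustration only.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def raw_moment(points: Sequence[Point], p: int, q: int) -> float:
    """Mean of x^p * y^q over the sampled contour points."""
    return sum((x ** p) * (y ** q) for x, y in points) / len(points)


def central_moment(points: Sequence[Point], p: int, q: int) -> float:
    """Same as raw_moment, but measured about the contour centroid."""
    cx = raw_moment(points, 1, 0)
    cy = raw_moment(points, 0, 1)
    return sum(((x - cx) ** p) * ((y - cy) ** q) for x, y in points) / len(points)


def moment_features(points: Sequence[Point], max_order: int = 2) -> List[float]:
    """Feature vector: centroid, then central moments of order 2..max_order."""
    feats = [raw_moment(points, 1, 0), raw_moment(points, 0, 1)]
    for order in range(2, max_order + 1):
        for p in range(order, -1, -1):
            feats.append(central_moment(points, p, order - p))
    return feats


# Hypothetical contour: the four corners of a 2x2 square.
square = [(0.0, 0.0), (2.0, 0.0), (2.0, 2.0), (0.0, 2.0)]
features = moment_features(square)  # [cx, cy, mu20, mu11, mu02]
```

A vector like this has a fixed, small dimension regardless of how many contour points are observed, which is what makes it usable as a feedback signal for a shape controller.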

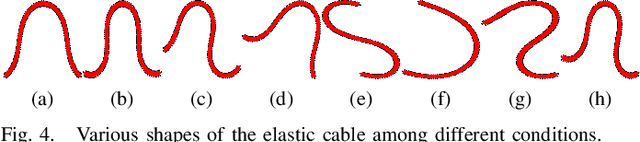

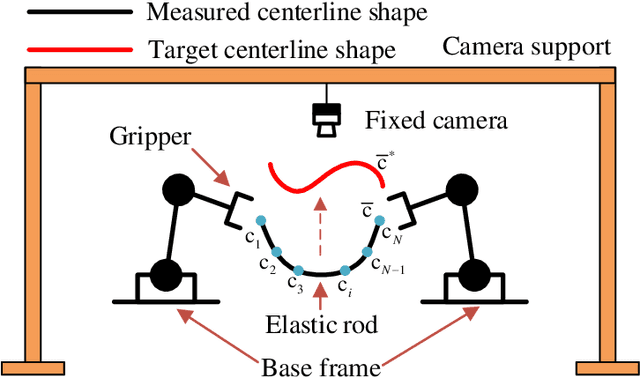

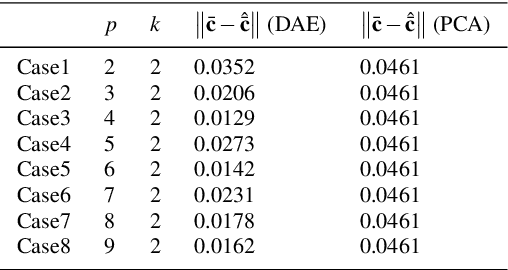

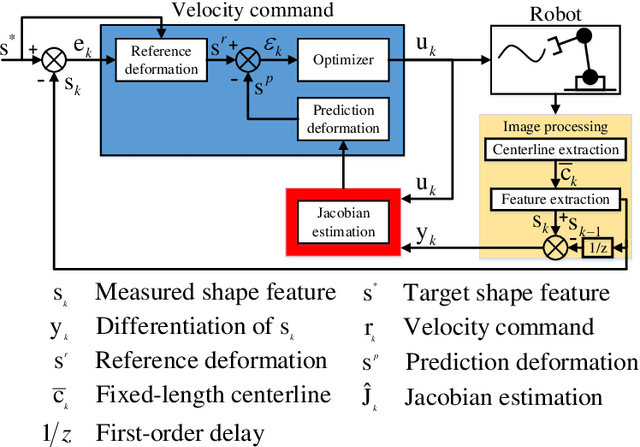

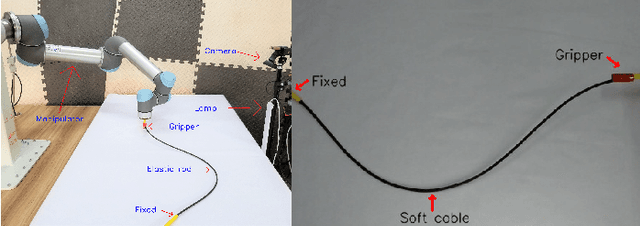

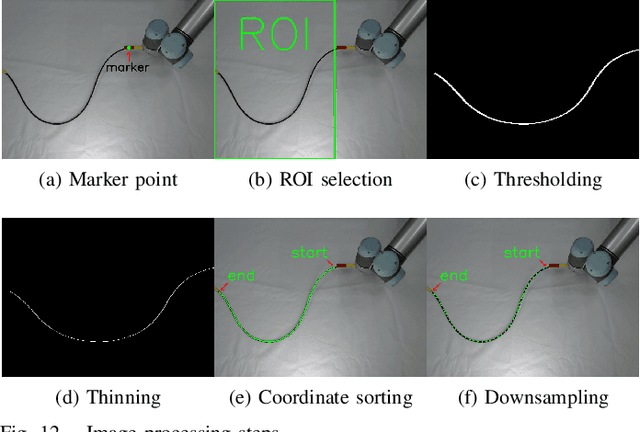

Towards Latent Space Based Manipulation of Elastic Rods using Autoencoder Models and Robust Centerline Extractions

Feb 09, 2021

Abstract:The automatic shape control of deformable objects is a challenging (and currently hot) manipulation problem due to their high-dimensional geometric features and complex physical properties. In this study, a new methodology to manipulate elastic rods automatically into 2D desired shapes is presented. An efficient vision-based controller that uses a deep autoencoder network is designed to compute a compact representation of the object's infinite-dimensional shape. An online algorithm that approximates the sensorimotor mapping between the robot's configuration and the object's shape features is used to deal with the latter's (typically unknown) mechanical properties. The proposed approach computes the rod's centerline from raw visual data in real-time by introducing an adaptive algorithm on the basis of a self-organizing network. Its effectiveness is thoroughly validated with simulations and experiments.

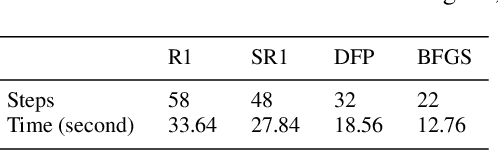

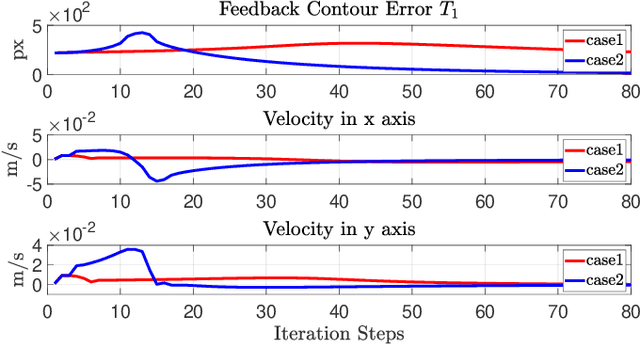

Adaptive Shape Servoing of Elastic Rods using Parameterized Regression Features and Auto-Tuning Motion Controls

Aug 16, 2020

Abstract:In this paper, we present a new vision-based method to control the shape of elastic rods with robot manipulators. Our method computes parameterized regression features from online sensor measurements, which make it possible to automatically quantify the object's configuration and establish an explicit shape servo-loop. To automatically deform the rod into a desired shape, our adaptive controller iteratively estimates the differential transformation between the robot's motion and the relative shape changes. This capability allows objects with unknown mechanical models to be manipulated effectively. An auto-tuning algorithm is introduced to adjust the robot's shaping motion in real-time based on optimal performance criteria. To validate the proposed theory, we present a detailed numerical and experimental study with vision-guided robotic manipulators.
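The iterative estimation of the motion-to-shape transformation can be illustrated with a Broyden-style rank-one update, a standard choice for online Jacobian estimation in model-free visual servoing. The abstract does not state which estimator the authors use, so this is a swapped-in technique shown under that assumption; `broyden_update`, `mat_vec`, and the `gain` parameter are hypothetical names.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def mat_vec(J: Matrix, v: Vector) -> Vector:
    """Matrix-vector product J @ v."""
    return [sum(a * b for a, b in zip(row, v)) for row in J]


def broyden_update(J: Matrix, dr: Vector, ds: Vector, gain: float = 1.0) -> Matrix:
    """Rank-one correction of the estimated Jacobian.

    J    : current estimate mapping robot motion to shape-feature change
    dr   : measured robot displacement over the last step
    ds   : measured change of the shape features over the same step
    gain : step size in (0, 1]; with gain = 1 the updated J satisfies J @ dr == ds
    """
    norm_sq = sum(x * x for x in dr)
    if norm_sq < 1e-12:
        # No informative motion: keep the previous estimate.
        return [row[:] for row in J]
    predicted = mat_vec(J, dr)
    residual = [s - p for s, p in zip(ds, predicted)]
    return [
        [J[i][j] + gain * residual[i] * dr[j] / norm_sq for j in range(len(dr))]
        for i in range(len(J))
    ]


# Hypothetical step: start from a zero estimate and observe one motion.
J0 = [[0.0, 0.0], [0.0, 0.0]]
J1 = broyden_update(J0, dr=[1.0, 0.0], ds=[2.0, 3.0])
```

Because each update only uses the most recent motion and the resulting feature change, no mechanical model of the rod is required; the estimate is refined as the robot moves.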
